Transparency: To keep this blog free of charge, we use affiliate links. If you click one and buy something, we receive a small commission. The price stays the same for you. Win-win!

When your own voice becomes a weapon: The rise of AI-powered audio fraud

For a long time, phishing emails with poor grammar were the primary tool of cybercriminals. But with the rapid advancement of generative artificial intelligence (AI), the threat potential has changed dramatically. Today, a short audio snippet is enough to clone an ordinary person’s voice with uncanny realism.

This phenomenon, known as “voice cloning” or “deepfake audio,” is transforming scams such as the classic “grandparent scam.”


The technology: Three seconds are enough

Previously, software programs required hours of audio material to create a synthetic voice. Modern AI models have broken through this barrier. Microsoft’s VALL-E or commercial tools from startups (originally designed for dubbing or audiobooks) can now convincingly imitate voices with an audio sample of just three seconds.

The AI analyzes not only the pitch, but also the speech rhythm, intonation, and even emotional nuances. The result is a synthetic voice capable of saying sentences the real person never uttered.

Where fraudsters get your voice

Criminals don’t need to perform complex hacks to obtain the necessary voice recordings. The sources are often publicly accessible:

Social Media: This is the most productive source. A short video in an Instagram story, a TikTok clip, or a Facebook vacation video in which you speak often provides sufficient material.

Answering Machine: Your personal voicemail greeting is often a clean, noise-free recording of your voice.

Spam Calls: Criminals call under false pretenses (e.g., fake surveys) to engage the victim in conversation and record their voice.

The Jennifer DeStefano Case: A prominent example from the USA illustrates the danger. Jennifer DeStefano received a call in which she heard her 15-year-old daughter crying and screaming, followed by the voice of a man demanding a ransom. The daughter’s voice was an AI clone. The real daughter was safe on a skiing trip.

The fraud scenarios

Once the voice is cloned, it is used for various types of fraud:

1. The Grandparent Scam 2.0 (Shock Calls)

The classic grandparent scam relied on the victim believing they were hearing their relative due to poor connection quality or mumbling. With AI, the caller now sounds exactly like the grandchild, child, or partner. The stories are dramatic: a car accident, an arrest, or a kidnapping. The shock and the familiar voice override the victim’s rational thinking.

2. Bypassing security barriers

Some banks and service providers use “Voice ID” for authentication on the phone. Security researchers have already demonstrated that AI-generated voices can bypass these biometric security gates.

3. CEO fraud (business fraud)

In this type of fraud, accounting staff receive calls from someone claiming to be their boss and are instructed to make urgent transfers to third-party accounts. In a widely reported case from 2019, the CEO of a British energy company transferred €220,000 after a caller used an AI-generated voice to impersonate the chief executive of the firm’s German parent company.

Statistics and risk perception

A McAfee study (“The Artificial Imposter,” 2023) already provided alarming figures at that time:

- 53% of adults who participated in the study stated that they had shared their own voice online (through social media, etc.).

- 70% of respondents were unsure whether they could distinguish an AI voice from a real voice.

- Globally, one in four respondents stated that they had already experienced AI voice fraud (either personally or through someone they knew).

The Federal Trade Commission (FTC) in the USA also explicitly warns of the rise of this type of fraud and reports annual losses in the millions due to “imposter scams.”

How to protect yourself

Experts and authorities recommend the following protective measures:

The Family Safe Word: Agree on a safe word with close relatives. If a caller claims to be in distress but doesn’t know the word, it’s a scam.

Hang Up and Call Back: If a supposed relative or supervisor calls from an unknown number and demands money: Hang up. Call the person back using a number you know and have saved.

Social Media Hygiene: Check your privacy settings. Set profiles to “Private” so strangers can’t access videos of you.

Be Skeptical of Payment Requests: Be extremely suspicious if money is requested via gift cards, cryptocurrency, or a cash handover.
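The protective measures above can also be written down as a simple rule set. The following Python sketch is purely illustrative: the `IncomingCall` fields, the `red_flags` function, and the payment labels are assumptions made for this example and do not belong to any real call-screening tool.

```python
from dataclasses import dataclass
from typing import List, Optional

# Payment channels named in the checklist above (the labels are our own).
RISKY_PAYMENT_METHODS = {"gift_card", "cryptocurrency", "cash_handover"}


@dataclass
class IncomingCall:
    """Hypothetical description of a call; all field names are illustrative."""
    claims_emergency: bool          # caller says they are in distress
    knows_safe_word: bool           # caller gave the agreed family safe word
    from_known_number: bool         # number matches a saved contact
    demands_money: bool
    payment_method: Optional[str] = None


def red_flags(call: IncomingCall) -> List[str]:
    """Return the warning signs from the checklist that apply to this call."""
    flags = []
    if call.claims_emergency and not call.knows_safe_word:
        flags.append("caller in distress does not know the safe word")
    if call.demands_money and not call.from_known_number:
        flags.append("money demanded from an unknown number: hang up and call back")
    if call.payment_method in RISKY_PAYMENT_METHODS:
        flags.append("payment requested via gift card, cryptocurrency, or cash")
    return flags


# Example: a shock call that trips every rule.
shock_call = IncomingCall(
    claims_emergency=True,
    knows_safe_word=False,
    from_known_number=False,
    demands_money=True,
    payment_method="gift_card",
)
for flag in red_flags(shock_call):
    print("Warning:", flag)
```

Treating any single flag as reason enough to hang up and call back on a saved number matches the advice above. The rules deliberately ignore how the voice sounds, since that is exactly what a clone can fake.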

The so-called democratization of AI tools has meant that sophisticated cyber weapons are now available to ordinary fraudsters. Trust in what we hear is fundamentally shaken. Education and changed behaviors (such as the use of a code word) are currently the most effective lines of defense against this invisible threat.

Sources

- McAfee Corp. The Artificial Imposter: Cybercriminals use AI cloning to scam families.

- Federal Trade Commission (FTC). Consumer Alert: Scammers use AI to enhance their family emergency schemes.

- CNN. (2023). Report on the Jennifer DeStefano case and AI kidnapping scams.

- The Wall Street Journal. Fraudsters Used AI to Mimic CEO’s Voice in Unusual Cybercrime Case.

Search for:

You might also be interested in:

Latest Posts:

- Historic SoftBank deal & starting signal for EU regulation: The AI news on New Year’s Eve

- When your own voice becomes a weapon: The rise of AI-powered audio fraud

- December 26, 2025: Record workload during the holidays & final sprint in the mega-deal

- Ad-free home network: Install Pi-hole on Windows

- December 23, 2025: $900 billion valuation in sight & Google’s Flash launch

- How to tune your FRITZ!Box into a professional call server

About the Author:

Beliebte Beiträge

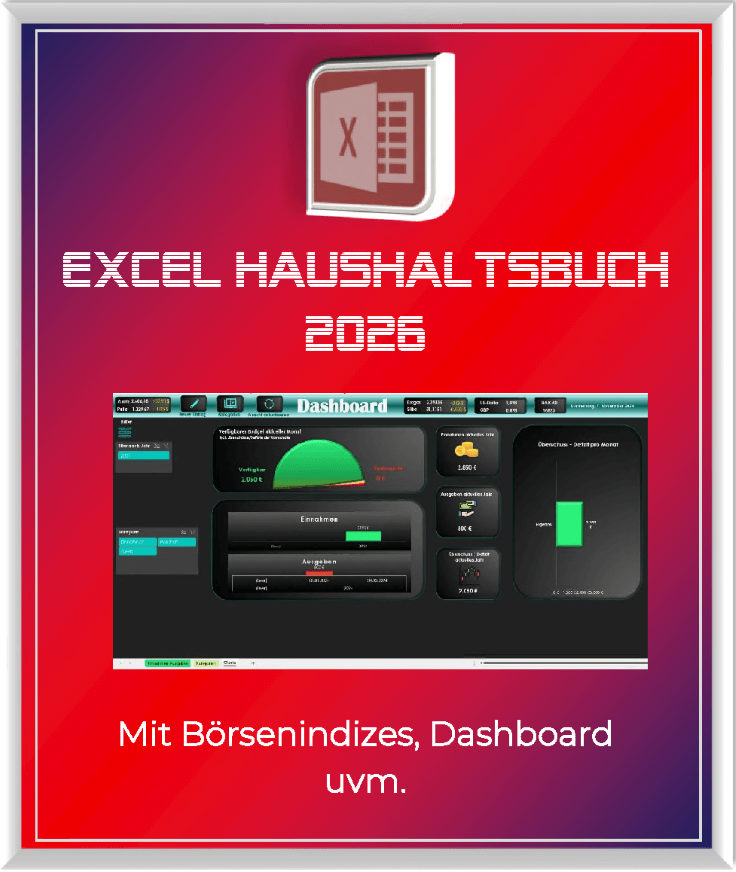

Create vacation planner in Excel

Michael2025-12-07T08:05:08+01:00September 15th, 2021|Categories: Microsoft Excel, Microsoft Office, Office 365, Shorts & Tutorials, Uncategorized|Tags: Excel, Excel Calendar|

We explain how you can create your own vacation planner 2022 in Microsoft Excel. And of course with a display of public holidays and weekends.

Create annual calendar 2022 in Excel

Michael2023-06-03T16:37:45+02:00September 14th, 2021|Categories: Microsoft Excel, Microsoft Office, Office 365, Shorts & Tutorials|Tags: Excel, Excel Calendar|

In our tutorial we describe how you can create an annual calendar for 2022 with a display of the calendar week and public holidays in Excel, and use it anew every year.

Create individual charts in Excel

Michael Suhr2023-06-03T16:41:04+02:00September 2nd, 2021|Categories: Microsoft Excel, Microsoft Office, Office 365, Shorts & Tutorials|Tags: Excel, Excel tables|

Charts are created quickly in Microsoft Excel. We explain how you can customize them, and also swap (transpose) the axes.

Create professional letter templates in Word

Michael Suhr2025-12-07T08:05:13+01:00June 9th, 2021|Categories: Microsoft Word, Microsoft Office, Office 365, Shorts & Tutorials|Tags: letters, Word|

How to create a professional letter template with form fields in Microsoft Word, and only have to fill in text fields.

Create a digital signature in Outlook and Word

Michael Suhr2023-06-03T10:58:17+02:00April 19th, 2021|Categories: Microsoft Outlook, Microsoft Office, Microsoft Word, Office 365, Shorts & Tutorials, Uncategorized|Tags: Outlook, Word|

Create a digital signature in Microsoft Outlook and Word for more security.

Create a Table of Contents in Word

Michael Suhr2023-06-06T11:43:33+02:00April 6th, 2021|Categories: Microsoft Word, Microsoft Office, Office 365, Shorts & Tutorials, Uncategorized|Tags: letters, Word|

To create a dynamic table of contents in Microsoft Word - Office 365